Using Jupyter Notebooks to Enable Exploration of Knoxville's Open Data

In this post I will talk about a project I have been working on to make Knoxville’s open data more accessible by converting formats such as PDF, HTML, and REST APIs to CSV, JSON, or GeoJSON files. Most importantly, a Binder notebook is provided at this link. For those not familiar with Binder notebooks: they are Jupyter notebooks that are currently hosted on Google Cloud for free! This lets anyone launch preconfigured interactive notebooks with nothing more than a web browser. Preconfigured is key because it lets me install all of the tools necessary for analysis (such as pandas), load the data, and give some example questions that can be asked about the data as motivation. Since it is a full programming environment with 1 CPU and about 8 GB of RAM, the sky is the limit on the work that you can do.

Most important links:

I encourage contributions to the project, and I will help anyone who would like to submit a pull request. The project is fully open source (MIT License).

To me, providing this data in a more accessible format that allows for full exploration is important. Without the data being available and easy to work with, decisions based on the data can appear mysterious and out of your control. This will allow us to engage with our government and provide real metrics of progress. I think that this data is most empowering to students, journalists, small businesses, and activists/organizers. Catherine Devlin talked about this in detail in her keynote at PyCon.

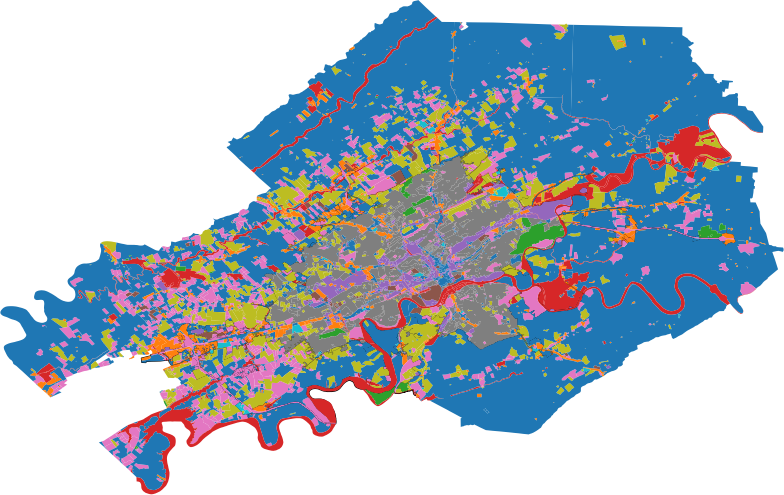

I was lucky enough to hear a talk from the City of Knoxville about one year ago, and I am proud to say that they have made some serious progress. The Metropolitan Planning Commission’s GIS data is significantly easier to work with than the Open Knoxville data. I can tell that they are focused on providing the data as opposed to creating interactive dashboards of the data. I am so happy to hear this. One of the biggest issues with parsing non-trivial data formats such as PDF, HTML, and undocumented REST APIs is the huge amount of developer time each person has to put in to work with the data. This time can be thought of as a barrier to entry. Each of the several datasets I have parsed from PDF or REST APIs to CSV took me about 4-6 hours. All of the datasets are available in the data folder of the repository.
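To give a feel for what one of these conversions involves, here is a minimal sketch of flattening a JSON response into CSV using only the Python standard library. The payload, the `results` and `attributes` keys, and the column names are all made up for illustration; they are not taken from any actual Knoxville API, and the real parsing in the repository may look quite different.

```python
import csv
import io
import json

# Hypothetical payload standing in for what an undocumented REST endpoint
# might return. The structure and field names are assumptions.
SAMPLE_RESPONSE = """
{
  "results": [
    {"id": 1, "attributes": {"category": "Pothole", "opened": "2018-06-01"}},
    {"id": 2, "attributes": {"category": "Graffiti", "opened": "2018-06-02"}}
  ]
}
"""


def flatten(record):
    """Merge the nested "attributes" object into a single flat row."""
    row = {"id": record["id"]}
    row.update(record.get("attributes", {}))
    return row


def records_to_csv(records, fieldnames):
    """Return CSV text for the records; missing fields are left blank."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=fieldnames,
        extrasaction="ignore",  # drop keys that are not in fieldnames
        lineterminator="\n",
    )
    writer.writeheader()
    for record in records:
        writer.writerow(flatten(record))
    return buffer.getvalue()


payload = json.loads(SAMPLE_RESPONSE)
csv_text = records_to_csv(payload["results"], ["id", "category", "opened"])
print(csv_text)
```

Most of the 4-6 hours goes into working out the real structure of the response, not into code like this, which is why doing it once and sharing the result saves everyone else the effort.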

The datasets included (as of June 2018):

- Greenways and Trails (geojson)
- Parks (geojson)
- Knoxville County Zoning (geojson)
- 311 call center metrics (csv)
- Crime dataset: type of crime, location, time, etc. (csv)
- Tree Inventory: current trees, future trees, location, species (csv)
- Properties: ownership, prices, etc. (csv)
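As a rough idea of how one of the GeoJSON files could be explored once it is loaded, here is a small sketch using only the standard library. The sample FeatureCollection, the `name` property, and the `summarize` helper are hypothetical; the actual files in the data folder will have their own property names.

```python
import json
from collections import Counter

# Hypothetical FeatureCollection for illustration only. Real files would be
# read from disk with json.load(open(path)) instead of an inline string.
SAMPLE_GEOJSON = """
{
  "type": "FeatureCollection",
  "features": [
    {"type": "Feature",
     "properties": {"name": "Example Park"},
     "geometry": {"type": "Point", "coordinates": [-83.92, 35.96]}},
    {"type": "Feature",
     "properties": {"name": "Example Greenway"},
     "geometry": {"type": "LineString",
                  "coordinates": [[-83.93, 35.95], [-83.91, 35.97]]}}
  ]
}
"""


def summarize(collection):
    """Count features by geometry type and collect their "name" property."""
    features = collection["features"]
    counts = Counter(feature["geometry"]["type"] for feature in features)
    names = [feature["properties"].get("name") for feature in features]
    return counts, names


collection = json.loads(SAMPLE_GEOJSON)
geometry_counts, feature_names = summarize(collection)
print(dict(geometry_counts))
print(feature_names)
```

The CSV files can be explored the same way with `csv.DictReader` or loaded into a pandas DataFrame in the Binder notebook.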